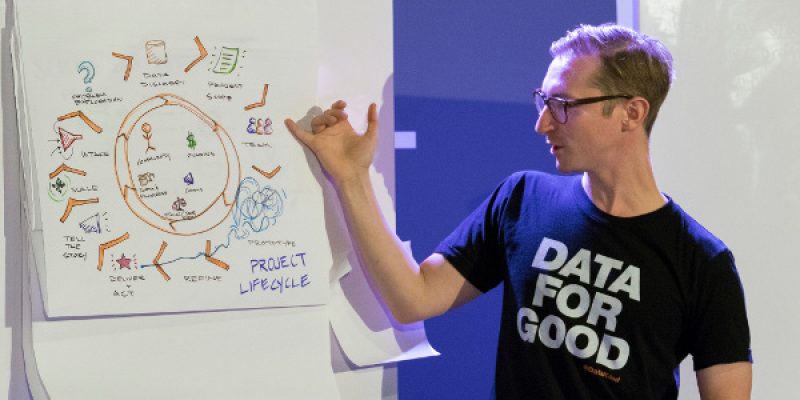

Jake Porway presents on the life cycle of social-sector data science projects at DataKind’s Global Chapter Summit in 2015. (Photo: DataKind)

Imagining the Possibilities of Data Science

DataKind’s Jake Porway on defining problems, employing social design, and taking data science in new directions

Jake Porway believes we can mobilize artificial intelligence to get smarter about meeting human needs. As the founder and Executive Director of the nonprofit DataKind, he helps the social sector tackle complex problems like hunger, homelessness and traffic safety by applying the same technologies that companies use to recommend movies and route delivery trucks.

Since 2011, DataKind has grown into a global network of thousands of volunteers skilled in data analysis, coding and visualization, as well as a staff of professional data scientists and community builders. Through its weekend-long DataDive events and multi-month DataCorps and Labs projects, the organization brings these technical experts together with governments, nonprofits and foundations to collaborate on data-driven solutions to essential problems.

Following an early grant that helped put DataKind on the path to sustainability, the Rita Allen Foundation supported the creation of DataKind’s new Labs Blueprint. The Blueprint offers guidance for launching and executing data science projects for social change. It incorporates lessons and examples from DataKind Labs’ inaugural project, which used data science to support the Vision Zero movement to reduce traffic-related deaths and injuries in New York City, Seattle and New Orleans.

I recently spoke with Jake about the ingredients of successful collaborations and changes in technology that are influencing his work and his outlook.

—Elizabeth Good Christopherson

President and Chief Executive Officer

Rita Allen Foundation

You emphasize collaboration at DataKind—you use the phrase “network weaving” to describe a critical aspect of your approach. What insights have you gathered from collaborating with groups with very different expertise?

We say we’re a network weaver, helping connect the folks who, if they only knew each other and knew how to work together, could create fundamentally great things for the world. We can be a catalyst for them for creating large-scale social change.

We’ve been thinking of ourselves as half data-science consultancy, half social-design firm. We have to figure out, from the data-science consultancy side, what are the technical requirements? If we’re going to work with the Red Cross and help them identify where they should put smoke detectors, do we have the data, do we have the right policies, do we have the team to do it? Then, on the social-design side, we also have to ask, if we do build this, who’s going to use it? How are they going to use it? What’s the impact going to be? Is it the right problem to be solving? What are other people doing in this space? All of that requires a huge list of ingredients: data, data scientists, problem solvers, social experts, subject-matter experts, et cetera. It would be great if these lived in just one organization or two that we could bring together, but they tend to live all across the board.

What makes our job fun and interesting is it requires scouring, and then making a call—like to the Avengers, where all the superheroes come together. And then, of course, working to facilitate and translate between them, because they come from very different backgrounds. Helping them see what we’re building in the center is really kind of a trick. The biggest thing is focusing that collaboration around finding the right thing to do. Most people think the hard part is getting folks together, but actually facilitating enough of the conversation to design something is really the hardest part.

How do you go about facilitating that conversation—and what kinds of challenges do you need to address to make a project successful?

One thing that’s unique to the state that we’re in right now with advanced technology is that you need someone to imagine the art of the possible. Defining the problem is difficult, but once you do, being able to propose what machine learning or artificial intelligence (AI) could do to solve that problem requires someone who knows pretty well what is possible with the technology. Right now most folks, for very good reasons, are not versed in that.

Ninety-nine percent of folks come to us and say, “I would really like to use data to prove my impact to my funders.” That’s what they focus on—they have to. We have to come in and say, “What if, instead of convincing your funders that you’re really good at keeping homeless people off the streets, we could actually take one of your working interventions and predict how many people are going to need services tomorrow? Would your life look different if we were able to identify the highest-leveraged places to build new homeless shelters?” It’s a very eye-opening moment.

I would say that the vast majority of our success comes from working incredibly closely with organizations to think from a design perspective about what problems they have, and what they need solved that we can engineer solutions to. It’s surprising to most people who come through our doors, because they’re thinking, “I’m going to volunteer in data science, so what cool data science should I build?” or “I’m a social-sector organization, I have a bunch of data and tech problems, and the nerds will figure it out.” I think both of them are surprised that we pull them into the room and say, “What’s your theory of change? What are the big blockers? How does your culture work? What’s the process?”

Once that’s all clear, we’ll bring the conversation to, “Well, did you know that if you’re having trouble triaging people receiving your services, a machine learning algorithm could actually automate some of that for you, or help you identify who you need to focus on the most?” That’s the aha moment, but it takes a lot of design teams and design principles to get there.

New ideas on the horizon for data science weren’t evident even a few years ago. How has the field of civic tech evolved since DataKind began?

Two big changes come to mind—one on the tech side and one on the political side. On the tech side, the funniest thing has been a rebranding. Five years ago, big data and data science covered anything that had to do with numbers. It’s now sort of splitting in two. Do I want to analyze data in a spreadsheet? That’s what data science has become. And now the data science where computers can actually predict stuff and do neat things is, for better or for worse, being called AI in the public sphere. I see so many news articles: AI is fighting cancer, AI is driving our cars, and it’s funny, because the core principles behind that are just computers making decisions off of data, the same way that Netflix or Amazon offer recommendations to you. That’s the stuff we do. We really like to be in the science of helping computers predict things for people so they can do their work as a resource-constrained nonprofit much better, faster, stronger.

The other thing is, five years ago there was this great optimism that people were all going to use the internet well, and that we were on this great progressive arc toward equality. I don’t think people perceived the potential negatives of this, and the ways that it contributed to tribalism, incredible hatred, and the ability for folks to entrench themselves in some pretty negative and caustic circles that had ramifications in the real world. We now see the double edges of technology—the sunlight is glinting into our eyes, and that’s painful.

I think we’re in a tough spot, because technology is great for automation—it takes something that you do well and helps you do it better. It doesn’t usually invent dramatically new ways of being. Issues of human bias, and trust, and empathy—I don’t think we know how to solve with technology. Not to say that some people aren’t trying.

That resonates a great deal with me. There is a wonderful quote from Edward R. Murrow when television was first being introduced: “This instrument can teach, it can illuminate; yes, and even it can inspire. But it can do so only to the extent that humans are determined to use it to those ends. Otherwise, it’s nothing but wires and lights in a box.” We can use technology for good or bad—it’s up to us. When you think about the art of the possible and your complex work, how do you see the potential for influencing democracy?

We did an open call for groups that are broadly strengthening democratic institutions, and we found there are a few places where machine learning or AI can probably help people out a lot. One is around government transparency. So much of the government transparency work right now is around desperately saving data that is being deleted, tracking the multitude of changes that are being made to government websites. One of the hardest things about being a watchdog is actually being able to watch everything, and computers are awesome at continuing to watch lots of things at scale.

We’ve actually found that computers can help automatically triage all of the Freedom of Information Act (FOIA) requests that people have been making. A journalist gets a FOIA request, but where does the data go? Having a system that can coordinate all the FOIA requests, keep a library of them, and help journalists find other FOIA requests that have already been delivered is hugely valuable. There’s a lot of work in that space, where the tools of data science and computing can help people do their jobs better, and be able to react to this incredibly dynamic space.

Another thing is helping folks think more about online action, for lack of a better way of saying it. The Southern Poverty Law Center is interested in how information is being disseminated, especially hateful information, and there are just so many different ways people do this. Now there’s social media, there are podcasts, there’s video, there are all these message boards. How do you keep track of it all and understand even what’s happening, much less try to say something smart about it? That seemed like another piece of low-hanging fruit where technology could help.

Do you have a seed of a new idea that you think could be transformative?

I think climate change and water are the most critical existential threats right now. Everything gets worse when your natural resources are under threat, and there is such huge potential to at least become adaptive. I don’t have the hopes that we’re going to convince everyone that climate change needs to be acted on. Until we do, someone needs to step in and ask, how will we relocate people? If sea levels rise, how will we keep clean water and food moving to people? That is a place where technology has a huge role to play. It’s something we have done very little on, but it gets me up in the morning—I’m going to focus more on it.

Anything else you want to add?

If you’re someone who wants to be a network weaver or is a technologist or a social organization, if you want to see machine learning and artificial intelligence used toward social impact, come get involved. We always need more people thinking in a design-centered way about how this technology can make a difference, so come aboard. We’re your boat.