
Key Learnings from the Dark Patterns Tip Line

Stephanie Nguyen, 2020 Consumer Reports Civic Science Fellow, shares insights from the research initiative she led to help the public understand—and avoid—deceptive design tactics.

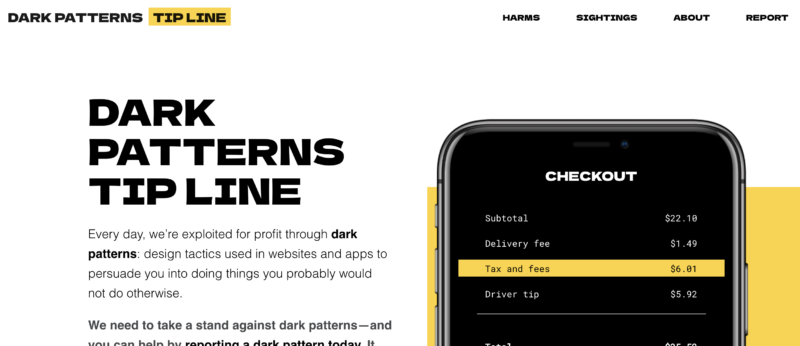

Dark patterns are design tactics used in websites and apps to persuade you to do things you probably would not do otherwise. Over the past several months, I’ve led the Dark Patterns Tip Line as part of the Rita Allen Foundation’s Civic Science Fellowship with Consumer Reports. Through this initiative, we encouraged people to submit their stories of dark patterns. Our goals were to inform the public about how to avoid the deceptive design tactics they may encounter while using online products and services, and to give policymakers, enforcers, and researchers evidence that illustrates the real-life harms of deception online.


Through this research initiative, we convened a multidisciplinary team of designers, technologists, researchers, and advocacy members. The tip line aims to provide insights that help regulators and policymakers act on these harms and safeguard people against them, but it does not provide direct legal help. On the site, we encourage consumers to contact their state Attorney General if they see a pattern that they believe violates statutes against unfair, misleading, and deceptive acts and practices.

During a three-week window, we collected hundreds of digital dark patterns, ranging from roundabout ways to unsubscribe from gym memberships to hidden fees in online retail shopping. We analyzed and vetted each submission before publication, ensuring that it fit our shared definition of dark patterns, included an image or other visual that verified the dark pattern, and contained no personally identifiable information.

Several key findings and themes emerged from the submitted dark patterns and our overall participatory design process:

(1) Understand how people experience harm. “I felt tricked” was the most commonly reported harm. “Being tricked” may sound insignificant or temporary. However, many of the examples also led to unknown recurring fees, accidentally sharing a person’s full phone address book, or enabling public-by-default options, all of which could lead to longer-term financial loss or loss of personal privacy. “I was denied choice, lost money, lost privacy or had my time wasted” were also commonly reported categories. In future iterations, it would be helpful to expand the harm types so that they capture the full range of burdens being inflicted on consumers. “I experienced discrimination” was the rarest reported harm in our dataset, but that does not mean such harms are absent from the scope of dark patterns. It is often not immediately obvious that elements of technology design can cause systemic discrimination or prevent someone from accessing an opportunity.

(2) Articulate how people understand dark patterns, in their own words. The tip line was designed to give users the autonomy to define, in their own words, what harm the dark pattern caused. These findings therefore focus more on how users described the ways they were negatively affected than on the dark pattern tactic alone. Many submissions that were not published involved scams or fraudulent businesses. For example, a company might send emails of “high importance” claiming the user has been “selected to receive an exclusive reward” and must give personal information in order to receive it. We also saw dark patterns that reflected a company’s business model. For example, one person described using a job website to find a housekeeper: “They send you an email saying someone has applied to your job, but the only way you can view the application is if you sign up for a monthly subscription.”

(3) Institute participatory design methods with a focus on protecting consumers from harm. We designed this project to draw from a diversity of perspectives and expertise, including researchers, technologists, designers, and advocacy members. We conducted one-on-one interviews with people who have experienced harm from manipulative patterns in technology to build the framing, language, and strategy of the tool. We drew on those insights to write the language describing the harms people faced through deceptive products and services online, but more work is needed to understand how harms manifest through products and services. More usability testing can and should be done. On governance and decision-making, each proposed modification received a thorough review. In the future, it is critical that we stay proactive and use the tip line as an early warning system for potentially larger, system-wide harms, rather than waiting for submissions to confirm that harms are happening.

(4) This project has limitations, and there is much more work to do. We created the first pass of harm labels by conducting user interviews with community members to better understand, in their own words, the types of dark pattern harms they experience. While this work was informed directly by research, the sample sizes were limited. Also, the term “dark patterns” can be problematic, both because of its connotations and because many of the people we interviewed were unfamiliar with it. It is impossible to contribute to the tip line if someone doesn’t know what a dark pattern is. The harm labels and language can and must be expanded and refined to meet people where they are in their understanding.

The people most likely and willing to submit dark patterns to the tip line may already have been more plugged into conversations about dark patterns, since the online submission process requires a baseline of tech savvy. To broaden our audience, we engaged with advocacy organizations with built-in communities, including Access Now, Consumer Reports, EFF, and PEN America. However, future work can and should engage directly with community-based organizations and with overburdened, underserved communities of color to gather more insights about how technology design may be deceptive in their lives. Geographically and culturally, this experiment focused on examples from the United States and therefore lacks international examples. We also limited the submission timeframe to approximately a month between May and June, and patterns may fluctuate by time of year.

Having more data for research is meaningful, but most importantly, we want to make sure it actually helps protect people against predatory behaviors. It is imperative that researchers and practitioners use dark pattern data to take action. We can cross-reference this data with existing datasets that overlap directly with the tip line. Many are publicly available and have documented dark pattern harms over several years. The Consumer Financial Protection Bureau, the Federal Trade Commission, and the Federal Communications Commission all maintain public databases and information that researchers can use to learn how companies harm people through deceptive designs. Additionally, Princeton researchers released a database of dark patterns culled from shopping websites, and Darkpatterns.org just released a curated list of dark patterns on Airtable. Public forums such as the #DarkPatterns hashtag on Twitter or Reddit’s r/assholedesign can also serve as inputs.

Dark patterns are a symptom of larger issues with business models based on unfettered data collection. We need legislation and regulation aimed at stopping (or curbing) these practices and changing these business models. Combining these data sources will allow us to draw more parallels between dark pattern tactics and the types of harms that may occur, and it can inform legislative and regulatory efforts. We can and should act now. As I noted on a panel at the FTC workshop on dark patterns, regulators and policymakers already have many tools and much of the information they need to begin enforcement against these predatory behaviors. While this project has limitations, it can serve as a bridge to future efforts. Researchers play a critical role in surfacing these trends, but they must work closely with regulators to ensure these insights are actionable.

Next steps. We have learned a lot during the first phase of the project. Moving forward, the tip line will transition to the Digital Civil Society Lab at the Stanford Center on Philanthropy and Civil Society (PACS), a global interdisciplinary research center that develops and shares knowledge to improve philanthropy, strengthen civil society, and address societal challenges. Stanford PACS informs policy and social innovation, philanthropic investment, and nonprofit practice, and it has committed to working closely with policymakers and regulators to ensure this work can be used to better safeguard consumers. The Lab will focus on using the tip line as a research and teaching tool, supported and cultivated by a community of students and researchers. It will engage students and faculty from multiple disciplines and universities, as well as community members and community-based organizations. The result will be a steady stream of engaged learners who help parse and make sense of the data so that others can use it for impact.

If you have any questions, would like to learn more, or want to engage with the tip line, please email Lucy Bernholz at bernholz@stanford.edu.

—

Many thanks to the community of academic researchers, technologists, designers, advocates, and others who have supported the tip line and helped with the first phase of the project, which is now complete. This work has a stable foundation and yielded valuable learnings thanks to the individuals who dedicated time to the website strategy and launch of this project (in alphabetical order by last name): Matt Bailey, Harry Brignull, Jennifer Brody, Justin Brookman, Sage Cheng, Amira Dhalla, Dennis Jen, Maureen Mahoney, Arunesh Mathur, Katie McInnis, Jasmine McNealy, Shirin Mori, and Ben Moskowitz. The Digital Lab at Consumer Reports helped incubate the site during this experimental phase. We also thank the organizations that helped share the initiative upon launch: Access Now, Consumer Reports, Darkpatterns.org, EFF, and PEN America.

Stephanie T. Nguyen is a technologist and investigative researcher focused on the intersections of human-centered design, public interest technology, and consumer protection.